Meta Platforms is building more safeguards to protect teen users from unwanted direct messages on Instagram and Facebook, the social media platform said yesterday.

The move comes weeks after the WhatsApp owner said it would hide more content from teens, following pressure from regulators on the world’s most popular social media network to protect children from harmful content on its apps, a Reuters report stated.

The regulatory scrutiny increased following testimony in the US Senate by a former Meta employee who alleged the company was aware of harassment and other harm facing teens on its platforms but failed to act against them.

Meta said teens will no longer get direct messages from anyone they do not follow or are not connected to on Instagram by default. They will also require parental approval to change certain settings on the app.

On Messenger, accounts of users under 16, or under 18 in some countries, will only receive messages from Facebook friends or people they are connected to through phone contacts.

Adults over the age of 19 cannot message teens who don’t follow them, Meta added.

Delhi HC cracks down on illegal streaming during ICC U-19, Men’s T20 World Cups

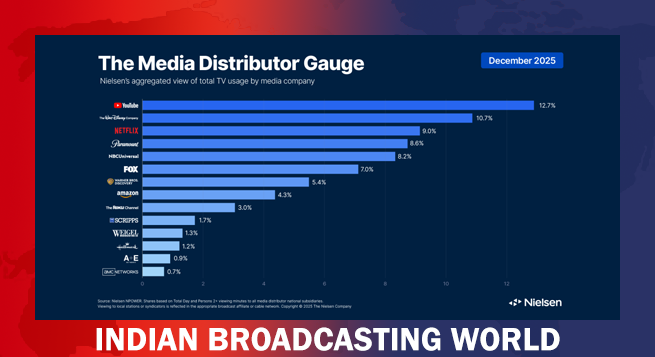

Delhi HC cracks down on illegal streaming during ICC U-19, Men’s T20 World Cups  Holiday Films, Football drive Dec viewership surge: Nielsen

Holiday Films, Football drive Dec viewership surge: Nielsen  ‘Black Warrant’ star Cheema says initial OTT focus intentional

‘Black Warrant’ star Cheema says initial OTT focus intentional  Cinema, TV different media to entertain audiences: Akshay Kumar

Cinema, TV different media to entertain audiences: Akshay Kumar  Zee Q3 profit down 5.37% on higher costs, lower ad revenues

Zee Q3 profit down 5.37% on higher costs, lower ad revenues  Nine minutes missing from Netflix version of ‘Dhurandhar’ sparks debate

Nine minutes missing from Netflix version of ‘Dhurandhar’ sparks debate  Vishal Mishra’s ‘Kya Bataun Tujhe’ sets emotional tone for ‘Pagalpan’

Vishal Mishra’s ‘Kya Bataun Tujhe’ sets emotional tone for ‘Pagalpan’  Anirudh Ravichander lends voice and music to ICC Men’s T20 World Cup 2026 anthem

Anirudh Ravichander lends voice and music to ICC Men’s T20 World Cup 2026 anthem  Blackpink unveils first concept poster for comeback mini-album ‘Deadline’

Blackpink unveils first concept poster for comeback mini-album ‘Deadline’  SS Rajamouli–Mahesh Babu’s ‘Varanasi’ set for April 7, 2027 theatrical release

SS Rajamouli–Mahesh Babu’s ‘Varanasi’ set for April 7, 2027 theatrical release