Prominent players in the AI industry, including OpenAI, Microsoft, Google, and Anthropic, yesterday announced the formation of the Frontier Model Forum. The forum's primary objective is to oversee the development of large machine learning models, with a specific focus on ensuring their safe and responsible deployment.

These cutting-edge systems, termed “frontier AI models,” exceed the capabilities of today's most advanced models. The concern is that they could possess dangerous capabilities that pose significant risks to public safety, Reuters reported.

Among the best-known applications of these models is generative AI, such as the technology powering chatbots like ChatGPT. These models draw on vast amounts of data to rapidly generate responses in the form of prose, poetry, and images.

Despite the numerous use cases for such advanced AI models, several government bodies, including the European Union, and industry leaders, like OpenAI CEO Sam Altman, have emphasized the need for appropriate guardrails to mitigate the risks associated with AI.

ICC warns Pak Cricket Board of legal action against it by JioStar

ICC warns Pak Cricket Board of legal action against it by JioStar  Dream Sports firm FanCode bags ISL global broadcast rights

Dream Sports firm FanCode bags ISL global broadcast rights  Guest Column: Budget’s policy interventions to boost Orange Economy

Guest Column: Budget’s policy interventions to boost Orange Economy  Delhi HC cracks down on illegal streaming during ICC U-19, Men’s T20 World Cups

Delhi HC cracks down on illegal streaming during ICC U-19, Men’s T20 World Cups  Fourth Dimension Media Solutions marks 15 years of industry leadership

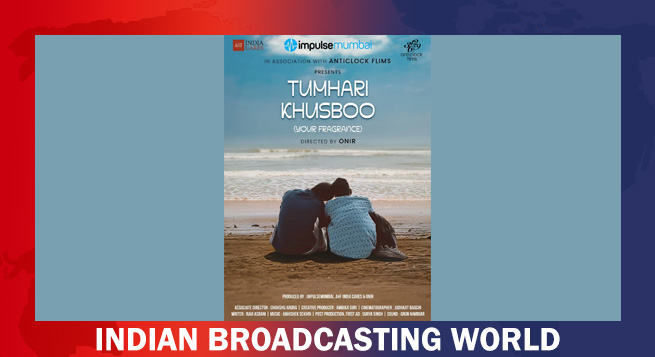

Fourth Dimension Media Solutions marks 15 years of industry leadership  Barun Sobti to headline Onir’s upcoming film ‘Tumhari Khushboo’

Barun Sobti to headline Onir’s upcoming film ‘Tumhari Khushboo’  Ananya Birla forays into cinema with launch of Birla Studios

Ananya Birla forays into cinema with launch of Birla Studios  Travelxp launches HD/4K on Makedonski Telekom in Balkans

Travelxp launches HD/4K on Makedonski Telekom in Balkans  India Today Group named exclusive media partner for WGS Dubai

India Today Group named exclusive media partner for WGS Dubai